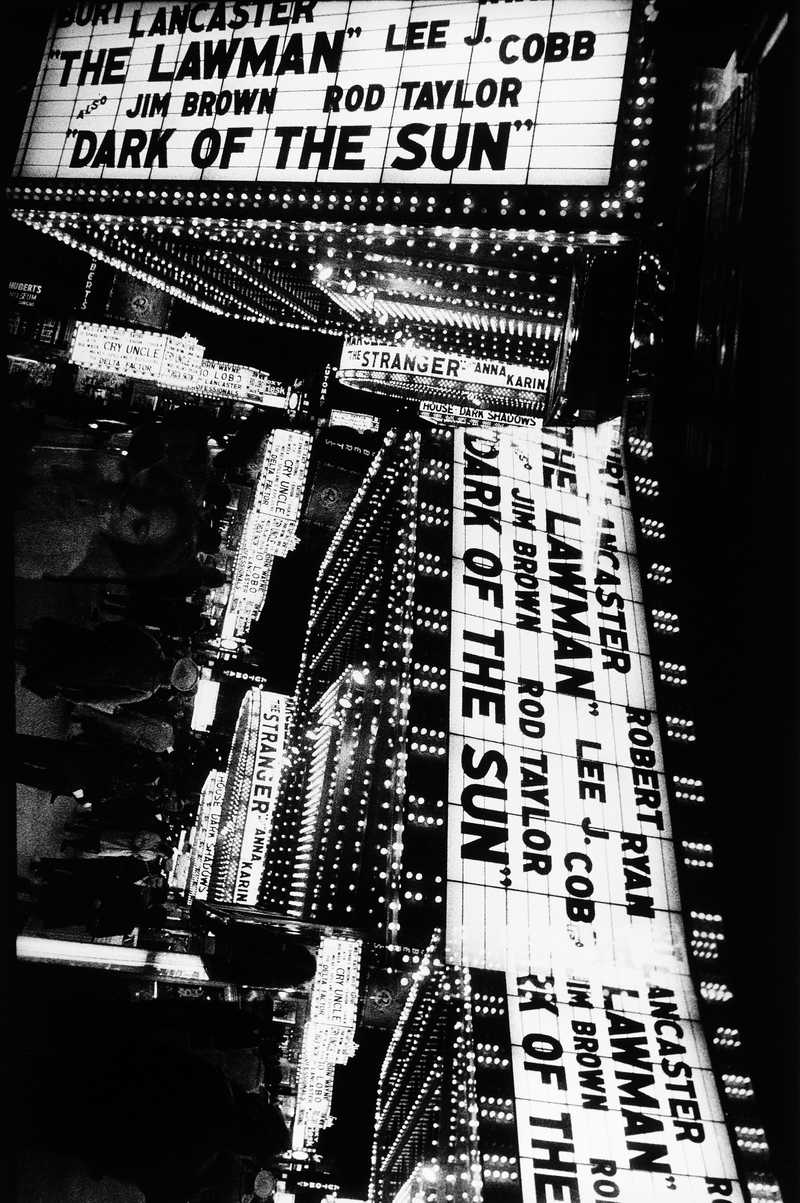

I'm currently obsessed with Daido Moriyama’s work with the half-frame camera[^1]. Using one frame of film for two exposures contributes to an aggressive, pushed look. But for me, the most interesting change is the shift from shot to sequence.

Presenting two events at once forces the viewer to consider the temporal and spatial juxtaposition of successive images.

I thought it might be interesting to explore something similar with stereo algorithmic music[^TM]. Performing different contexts simultaneously, bringing them in and out of dialogue with one another, exploring their (inter)subjectivity, and questioning the absurdity of context itself.

Here are some ideas with two algorithms.

Left is essentially @p__meyer’s intra patch.

Right is a variant of that patch, which I made to implement Bresenham’s line algorithm in real time.
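For anyone unfamiliar with how a line-drawing algorithm turns into rhythm, here is a minimal Python sketch. It is not the patch itself, just the usual integer error-accumulator reading of Bresenham: walk along the bar one step at a time, add the number of onsets to a running error, and fire whenever the error crosses the number of steps. The function name `bresenham_rhythm` and its parameters `pulses` and `steps` are my own placeholders.

```python
def bresenham_rhythm(pulses: int, steps: int) -> list[int]:
    """Spread `pulses` onsets over `steps` slots, Bresenham style.

    Think of a line from (0, 0) to (steps, pulses): each time the line
    climbs one unit in y, that step gets an onset (1); otherwise a rest (0).
    """
    if steps <= 0:
        return []
    # Clamp so we never ask for more onsets than there are slots.
    pulses = max(0, min(pulses, steps))

    pattern = []
    error = 0
    for _ in range(steps):
        error += pulses          # advance along the line's slope
        if error >= steps:       # crossed into the next row: onset
            error -= steps
            pattern.append(1)
        else:                    # still in the same row: rest
            pattern.append(0)
    return pattern


if __name__ == "__main__":
    for pulses in range(0, 9):
        print(pulses, bresenham_rhythm(pulses, 8))
```

Only integer addition and comparison are involved, which is part of why this kind of pattern is cheap to recompute on every tick.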

They’re being fed the exact same information, and each controls its own instance of an FM synth (Operator). What’s fun is that they are inverted: as intra becomes denser, the bresenhamians become sparser. I’m also feeding their position in the bar to pitch, and modulating their time unit with sine waves.
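The routing is easier to see in code than in words, so here is a rough Python sketch of the three mappings. Everything specific in it is an assumption for illustration: the function names, the linear position-to-pitch mapping, the MIDI range 48 to 72, and the sine rate and depth are all made up, and the actual patch may do each of these differently.

```python
import math


def inverted_densities(density: float) -> tuple[float, float]:
    """Give the same control value to both sides, flipped for the right.

    Returns (left_density, right_density), each in [0, 1].
    """
    d = min(1.0, max(0.0, density))
    return d, 1.0 - d


def position_to_pitch(step: int, steps_per_bar: int,
                      low: int = 48, high: int = 72) -> int:
    """Map a step's position in the bar linearly onto a MIDI note range.

    The range (48..72) is an arbitrary choice for this sketch.
    """
    fraction = (step % steps_per_bar) / steps_per_bar
    return low + round(fraction * (high - low))


def modulated_unit(base_unit: float, t: float,
                   rate_hz: float = 0.1, depth: float = 0.5) -> float:
    """Stretch and squeeze the time unit with a slow sine wave.

    `t` is elapsed time in seconds; `rate_hz` and `depth` are
    hypothetical modulation settings.
    """
    return base_unit * (1.0 + depth * math.sin(2.0 * math.pi * rate_hz * t))


if __name__ == "__main__":
    left, right = inverted_densities(0.25)
    print("densities:", left, right)
    print("pitches:", [position_to_pitch(s, 16) for s in range(0, 16, 4)])
    print("unit at t=2.5s:", modulated_unit(0.25, 2.5))
```

Giving each side its own `rate_hz` would let the two time units drift apart and back together.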

They’re sometimes consonant, sometimes dissonant. Always fun, in any case.